Imagine you’re craving a coffee and ask a chatbot for recommendations. It goes beyond just listing cafes; it becomes your personalized coffee concierge. It tells you which shop is closest, who roasts the most delicious beans, and which cafe offers the perfect ambiance to match your mood. This is the power of generative AI.

It unlocks a transformative era where powerful algorithms analyze your data and create helpful, personalized experiences. But it doesn’t stop there.

Generative AI also reimagines information by analyzing existing data and generating new content: text, images, audio, video, and more.

From ChatGPT and DALL-E to Google Gemini and Microsoft Copilot, a new class of generative AI applications has arrived. Each of these tools is powered by a generative AI model trained on vast amounts of data, such as text, images, and audio, that was fed into the system in advance.

These complex models then assemble responses that answer end users’ queries as well as they can. Many of today’s generative AI foundation models are built on large language models (LLMs), which are trained to understand and respond to natural language, adding versatility to the results.

According to a McKinsey & Company report released in June 2023, generative AI could add $6.1 trillion to $7.9 trillion to the global economy by 2030, thanks primarily to its contribution to worker productivity.

However, while generative AI offers numerous benefits for work efficiency, it also presents challenges such as potential inaccuracy, privacy violations, and intellectual property exposure.

To understand generative AI more comprehensively, we will delve into its inner workings, explore its most immediate applications and use cases, and share some compelling examples. We’ll also discuss best practices for its responsible and effective use.

So, without further ado, let’s explore everything about generative AI.

What is Generative AI?

Generative AI, as the name implies, refers to algorithms capable of rapidly creating content based on text prompts.

Different AI tools are available to produce various content forms.

For instance, DALL-E, a generative AI tool, excels at creating high-quality images based on user prompts. Similarly, Jasper helps craft long-form content, while Copy.ai helps social media influencers and management teams generate engaging social media posts.

These are just a few examples of what’s possible with generative AI.

By interacting with these models conversationally, much as you would talk to another person, you can unlock a wide range of content creation possibilities.

A Brief History of Generative AI

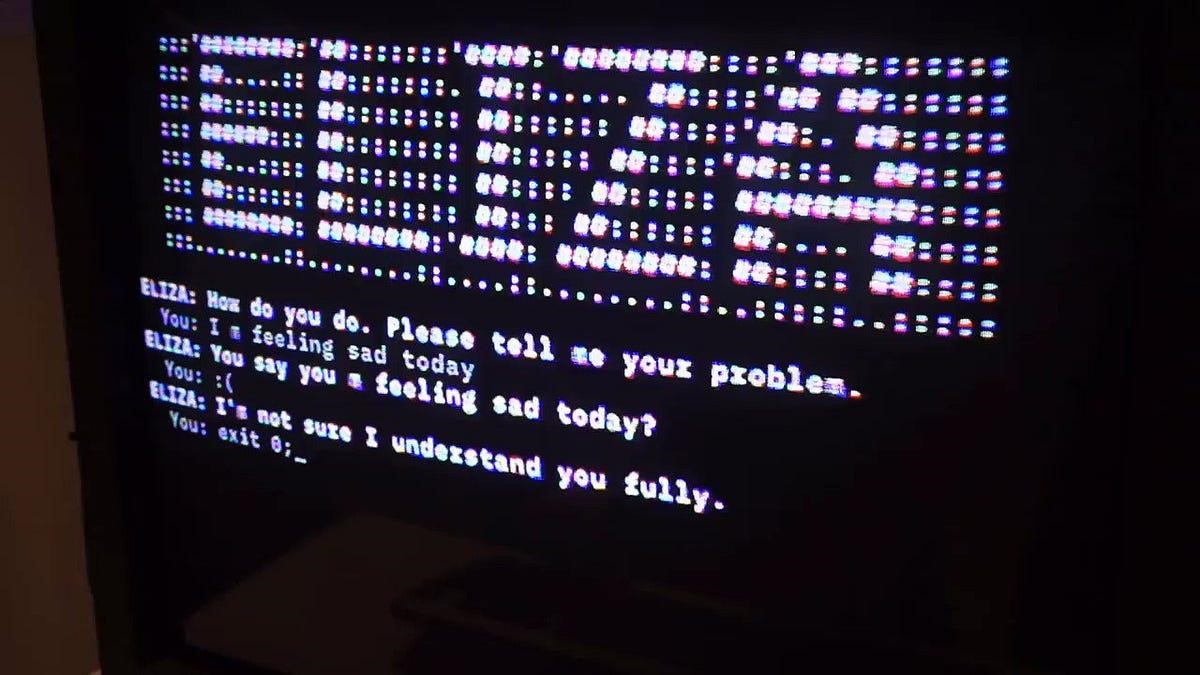

Eliza (1966):

It all started back in the mid-1960s, when the first conversational chatbot was introduced. Eliza, a rule-based program, was created by Joseph Weizenbaum at the Massachusetts Institute of Technology (MIT). Its responses were limited to a set of predefined rules and templates. Although it lacked contextual understanding, it laid the foundation for the generative AI models emerging today.
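To make the idea of “predefined rules and templates” concrete, here is a minimal Python sketch of an Eliza-style responder. The rules are hypothetical illustrations, not Weizenbaum’s original script. Notice that the program only matches surface patterns and has no memory of earlier turns, which is exactly the lack of contextual understanding described above.

```python
import re

# Hypothetical Eliza-style rules: (pattern, response template).
# A template can reuse text captured by its pattern via {0}, {1}, ...
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause\b", re.IGNORECASE), "Is that the real reason?"),
]
FALLBACK = "Please tell me more."


def respond(user_input: str) -> str:
    """Return the first matching rule's template, or a generic fallback."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*match.groups())
    return FALLBACK
```

For example, `respond("I need a coffee.")` returns `"Why do you need a coffee?"`, while anything outside the rules, such as `"The weather is nice"`, gets the fallback `"Please tell me more."`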

Figuratively speaking, modern AI models start out like blank brains: complex neural networks exposed during training to vast amounts of real-world data. Over time, they learn the patterns in that data, which lets them generate novel content in response to user prompts.

Even today, the best AI experts cannot precisely explain how these complex algorithms work internally, or how a blank network is trained and fine-tuned to answer even the most demanding queries.

The Inception of AI:

1956 marked a pivotal moment in the history of artificial intelligence (AI) with the Dartmouth Workshop. John McCarthy, who coined the term “artificial intelligence” in the proposal for the workshop, brought together several key figures in the field, including Marvin Minsky, Nathaniel Rochester, and Claude Shannon. These pioneers were not just theorists; they actively shaped the future of AI through their contributions.

The Turing Test

The idea of machines mimicking human conversation dates back to 1950, when Alan Turing, a pioneering mathematician, computer scientist, and logician often called the father of modern computing and artificial intelligence, proposed what became known as the Turing Test in his paper “Computing Machinery and Intelligence.” In the test, a human evaluator judges natural language conversations between a human and a machine designed to generate human-like responses. The Turing Test became an influential benchmark for conversational AI and an early inspiration for today’s generative AI.

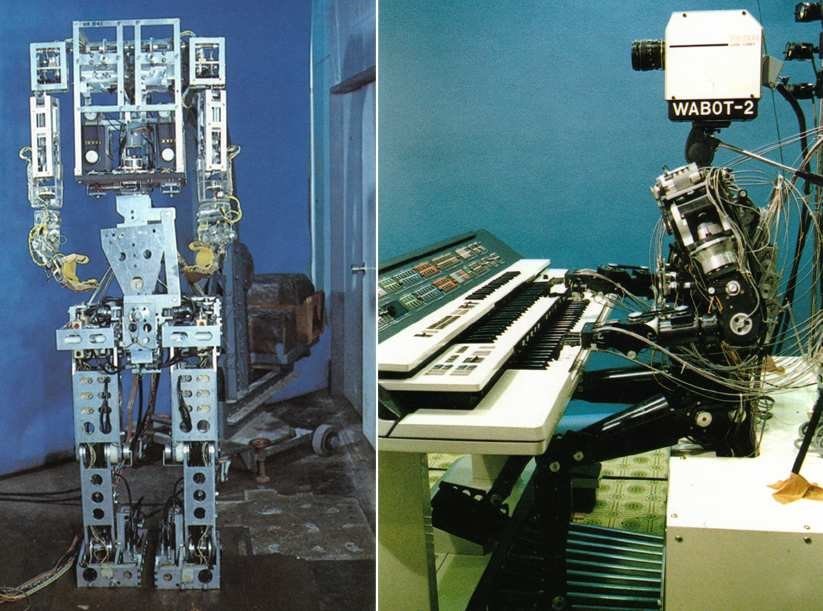

WABOT-1

In the early 1970s, Japan made a significant leap in robotics with WABOT-1, the world’s first full-scale humanoid robot. Developed between 1970 and 1973 by Professor Ichiro Kato and his team at Waseda University, WABOT-1 represented a pioneering achievement in humanoid robotics.

This remarkable robot could communicate in Japanese, a groundbreaking feature for its time. Moreover, WABOT-1 had advanced capabilities for:

- Measuring distances and directions: WABOT-1 used external sensors to perceive its surroundings.

- Walking: Equipped with articulated lower limbs, it could move around its environment.

- Gripping and carrying objects: Tactile sensors in its hands enabled it to grasp and manipulate objects.

- Seeing: Two 525-line video cameras with a 35-degree field of view provided its “vision.”

The AI Winter

The field of artificial intelligence (AI) has experienced periods of both excitement and skepticism. One such period, known as the “AI winter”, spanned from the mid-1970s to the late 1990s. During this time, research funding and public interest in AI declined significantly.

Several factors contributed to the AI winter. Firstly, early AI projects often fell short of their ambitious goals, leading to disappointment and a reevaluation of AI’s capabilities. Secondly, limitations in computing power at the time hindered researchers’ ability to develop more sophisticated models. Finally, a lack of clear understanding about how to achieve true intelligence further dampened enthusiasm.

The consequences of the AI winter were significant. Reduced research activity slowed the pace of technological advancement in the field. Moreover, public skepticism about AI’s potential hindered its adoption in various industries. However, the field eventually emerged from the AI winter, fueled by advancements in computing power, new research approaches, and a renewed appreciation for AI’s potential benefits.

The AI Re-emergence

The year 2006 marked a turning point for artificial intelligence (AI) as it began to emerge in various practical fields. The financial sector witnessed significant advancements, with AI employed for tasks like fraud detection, credit risk assessment, and algorithmic trading. In the retail sector, recommendation engines were designed to guide consumers towards informed purchasing decisions.

Social media marketing transformed with the introduction of targeted marketing and effective customer segmentation, enabling businesses to reach their target audience more efficiently. AI’s reach further extended to the manufacturing industry, where it optimized internal operations, performed quality control checks, and implemented predictive maintenance.

The healthcare sector also embraced AI, using it to enhance medical diagnosis, research new drugs, and provide personalized medicine for individual patients. Recognizing its vast potential, venture capitalists directed significant investments toward AI research and development within leading technology companies.

While the practical applications of AI were gaining traction, popular media portrayals sparked public interest. The 2005 film “Aeon Flux” depicted Bregna, a futuristic walled city where advanced technology played a central role. The film’s depiction of automated security systems, centralized control, and AI-powered robotics offered a glimpse of how advanced technology could shape the future.

What Types of Generative AI Are in Use Today?

Fast forward to the present, and AI has taken center stage. It has become an effective tool in almost all walks of life, and irrespective of which industry you’re in, AI is breaking ground. Countless apps and software solutions now rely on AI models accessed through APIs behind the scenes. But what technology is actually working at the backend?

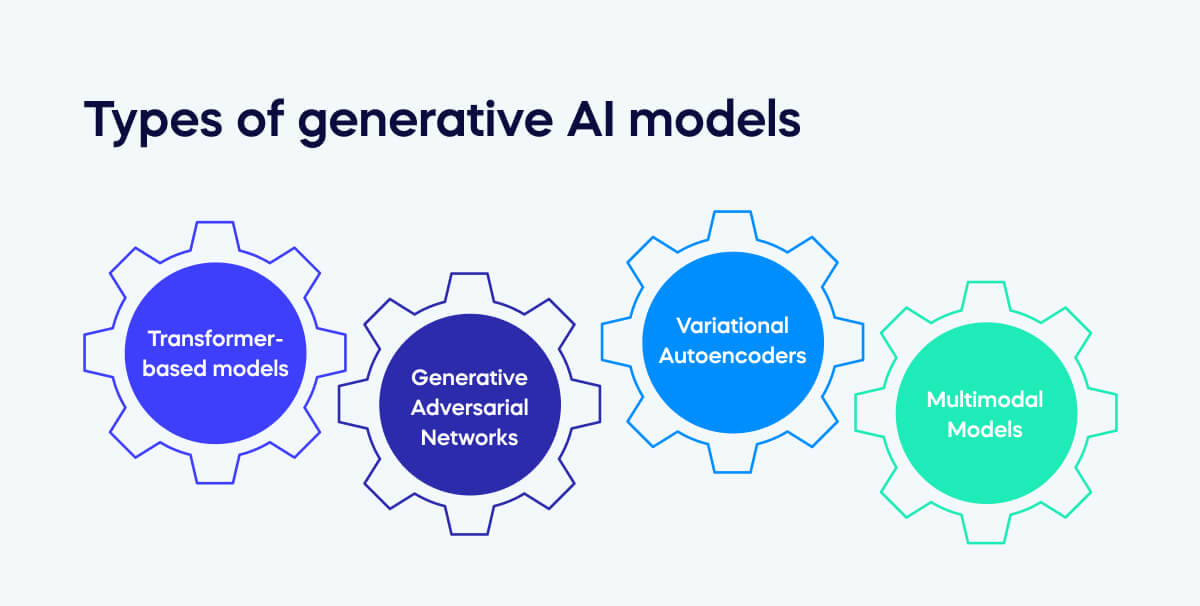

Let’s look at the main deep-learning techniques involved:

Transformer Models:

Transformers are neural networks that learn context by tracking relationships between elements in sequential data, such as the words in a sentence, using a mechanism called self-attention. They are mostly used for natural language processing (NLP) tasks and underpin most of the foundation models behind today’s AI tools.
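The heart of that relationship tracking is scaled dot-product attention. Below is a minimal plain-Python sketch of a single attention head; real Transformers add learned query/key/value projections, multiple heads, masking, and positional information, all of which are omitted here, and the token vectors are made up for illustration.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V for a single head, without masking."""
    d_k = len(k[0])
    output = []
    for q_row in q:
        # How strongly this position's query matches every position's key.
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        # Each output vector is a weighted blend of all value vectors.
        output.append(
            [sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))]
        )
    return output


# Self-attention over three made-up 2-d token vectors: Q = K = V = tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `contextualized` is a context-aware version of the corresponding token: a blend of every token’s vector, weighted by how relevant the model judges each one to be.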

Generative Adversarial Networks (GANs):

GANs pit two neural networks against each other: the generator and the discriminator. The generator creates new content, while the discriminator tries to tell real samples from generated ones. As training continues, the generator learns to produce outputs the discriminator can no longer distinguish from real data, yielding increasingly realistic results.

Although GANs are notorious for being used to create deepfakes, they also have many legitimate business applications. Whether it’s art, video, or any other form of content creation, GANs can be truly useful.
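The tug-of-war between the two networks is captured by their training objectives. Here is a minimal sketch of the standard binary cross-entropy losses from the original GAN formulation, using the common “non-saturating” generator variant. The networks and training loop are omitted; the inputs are simply the discriminator’s predicted probabilities that each sample is real.

```python
import math
from typing import List


def bce(prob: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one predicted probability (clipped to avoid log(0))."""
    prob = min(max(prob, eps), 1 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))


def discriminator_loss(d_real: List[float], d_fake: List[float]) -> float:
    """The discriminator wants D(real) -> 1 and D(generated) -> 0."""
    real = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real + fake


def generator_loss(d_fake: List[float]) -> float:
    """Non-saturating generator loss: the generator wants D(generated) -> 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)
```

When the generator fools the discriminator (its outputs score close to 1), `generator_loss` drops and `discriminator_loss` rises; the two losses pull in opposite directions, which is what drives both networks to improve.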

Variational Autoencoders (VAEs):

While Transformers understand and manipulate sequences, and GANs create realistic data, Variational Autoencoders (VAEs) focus on learning the essence of a dataset. They achieve this by compressing data into a special code that captures the data’s core structure.

Unlike a standard autoencoder, a VAE learns this code as a probability distribution rather than a single fixed point, so it can not only reconstruct the data but also generate new, similar data points by sampling from that distribution. This makes VAEs valuable for tasks like data compression, anomaly detection, and even creating new images or music.
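Two ingredients make a VAE’s code sampleable: the reparameterization trick, which draws a latent point from the learned distribution in a way that still allows training, and a KL-divergence penalty that keeps that distribution close to a standard normal. A minimal sketch for a diagonal Gaussian latent, assuming the encoder outputs a mean (`mu`) and log-variance (`log_var`) per dimension; the encoder and decoder networks themselves are omitted.

```python
import math
import random
from typing import List


def reparameterize(mu: List[float], log_var: List[float], rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), where sigma = exp(log_var / 2)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: List[float], log_var: List[float]) -> float:
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


# To generate new data, a trained VAE samples z from N(0, 1) and passes it
# through its decoder (not shown here).
rng = random.Random(0)
z = reparameterize([0.5, -1.0], [0.0, -2.0], rng)
```

The KL term is zero when the encoder outputs exactly a standard normal (`mu = 0`, `log_var = 0`) and grows as the code drifts away from it.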

By leveraging these generative AI models, businesses are finding new ways to approach tasks and become more productive. Take Expedia, one of the world’s most popular travel-planning websites and apps, which has integrated a conversational AI assistant into its services.

Customers no longer have to spend hours searching for the perfect vacation spot. They can simply chat with the assistant about their preferences, and the app builds an organized list of hotels and nearby attractions to help them plan.

Similarly, soft drink giant Coca-Cola has partnered with Bain & Company, which uses generative AI tools such as ChatGPT to assist with its marketing, for example by crafting personalized ad copy, images, and messaging for customers.

Snapchat has also built a conversational AI into its product: the bot, called My AI, can be called up at any time to answer questions or simply for entertainment. Some of the suggested uses include “advising on the perfect gift for BFFs birthday, helping plan a hiking trip, or making a suggestion for dinner.”

Responsibilities Associated with Developing Solutions Using AI

AI has created a multitude of opportunities to improve people’s lives around the world, whether in business, healthcare, or education. It has also raised new questions, such as how best to build systems that are fair, private, safe, and interpretable.

Go for a Human-Centric Approach

Imagine you’re designing a brand-new app or website that uses advanced AI to make recommendations or decisions.

At the end of the day, what really matters is how actual users experience and interact with your system in the real world.

First things first, you need to build in clear explanations and user controls from the get-go. People want to understand why your AI is suggesting certain things, and they want to feel empowered to make their own choices. Transparency is huge for creating a positive experience.

Next, think about whether your AI should just spit out one definitive answer, or present a handful of options for the user to choose from. Nailing that single perfect answer is incredibly hard from a technical perspective. Sometimes it makes more sense to suggest a few good possibilities and let the user decide based on their unique needs and preferences.
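One simple way to implement that choice is to return a single answer only when it clearly beats the runner-up, and otherwise show a short list. The sketch below assumes your system already produces a relevance score per option; the scores, `k`, and the `margin` threshold are hypothetical values you would tune for your own product.

```python
from typing import Dict, List, Tuple


def suggest(scores: Dict[str, float], k: int = 3, margin: float = 0.2) -> List[Tuple[str, float]]:
    """Return one answer when it clearly wins, otherwise the top-k options."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    if len(ranked) >= 2 and ranked[0][1] - ranked[1][1] >= margin:
        return ranked[:1]  # a clear winner: give a single confident answer
    return ranked[:k]  # a close call: let the user decide
```

With made-up cafe scores like `{"Bean There": 0.6, "Roast Lab": 0.55, "Cup & Co": 0.5, "Drip": 0.1}`, no option clearly wins, so the user sees the top three and picks the one that fits their mood.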

As you’re developing the system, get ahead of potential downsides by modeling user reactions and doing live testing with a small group first. That way you can make adjustments before launching to the masses.

Most importantly, bring a truly diverse range of user perspectives into the process from day one. The more backgrounds and use cases you account for, the more people will feel that your AI technology is working for them, not against them. A great user experience is all about making people feel heard, understood, and empowered.

Examine Your Raw Data

You know the old saying – garbage in, garbage out? Well, that applies big time when it comes to machine learning. The data you use to train your AI model is going to shape how it thinks and behaves. It’s kind of like parenting – the “inputs” you expose an AI to mold its worldview.

So before you even start building a model, do some serious digging into the raw data you’ll be using. Comb through it for errors, omissions, and outright incorrect labels. Those data gaps could throw your AI for a loop down the line.
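A first pass at that digging can be automated. Here is a minimal sketch that flags missing required fields and labels outside an allowed set; the record layout, field names, and label set are hypothetical and would come from your own dataset’s schema.

```python
from typing import Dict, List, Optional, Sequence, Set

Record = Dict[str, Optional[str]]


def audit_records(
    records: List[Record],
    required_fields: Sequence[str],
    allowed_labels: Set[str],
    label_field: str = "label",
) -> List[str]:
    """Return human-readable problems: missing required fields and unexpected labels."""
    problems = []
    for i, record in enumerate(records):
        for field in required_fields:
            if record.get(field) in (None, ""):
                problems.append(f"row {i}: missing '{field}'")
        label = record.get(label_field)
        if label not in (None, "") and label not in allowed_labels:
            problems.append(f"row {i}: unexpected label {label!r}")
    return problems


rows = [
    {"text": "great latte", "label": "positive"},
    {"text": "", "label": "positive"},
    {"text": "cold brew", "label": "postive"},  # a typo'd label
]
issues = audit_records(rows, ["text", "label"], {"positive", "negative"})
```

Here `issues` reports the empty text in row 1 and the misspelled label in row 2, two problems that would otherwise slip silently into training.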

Next up, make sure your training data actually represents the full range of real-world scenarios and users your AI will experience. If you only train it on data from 80-year-olds, it’s probably not going to generalize well to teens. Or if the data is just from summer conditions, good luck when winter rolls around.
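You can check representation by comparing how often each group appears in the training data against the share you expect in the real world. A minimal sketch, assuming you know (or can estimate) those expected shares; the age bands, shares, and `tolerance` below are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def underrepresented_groups(
    values: List[str], expected_share: Dict[str, float], tolerance: float = 0.5
) -> List[str]:
    """Flag groups whose share of the data is below tolerance * their expected share."""
    counts = Counter(values)
    total = len(values)
    flagged = []
    for group, expected in expected_share.items():
        actual = counts.get(group, 0) / total if total else 0.0
        if actual < tolerance * expected:
            flagged.append(group)
    return flagged


# Training data dominated by older users, versus the audience we expect to serve.
training_ages = ["80+"] * 90 + ["13-19"] * 10
expected = {"13-19": 0.3, "20-64": 0.5, "80+": 0.2}
gaps = underrepresented_groups(training_ages, expected)
```

In this made-up example, teens make up only 10% of the data against an expected 30%, and working-age adults are missing entirely, so both groups are flagged.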

As you develop the model, keep a hawk eye out for any “training-serving skew”: basically, when performance looks great in training but goes sideways once the model is deployed in the real world. Identify such gaps and rework your model accordingly.
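A simple way to spot such a gap is to compute the same metric on held-out training-time data and on a labeled sample of live traffic, then compare. The sketch below uses accuracy and a hypothetical 5-point `max_gap` threshold; in practice you would pick metrics and thresholds that fit your use case.

```python
from typing import Sequence, Tuple


def accuracy(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def skew_gap(
    train_preds: Sequence[int],
    train_labels: Sequence[int],
    serve_preds: Sequence[int],
    serve_labels: Sequence[int],
    max_gap: float = 0.05,
) -> Tuple[float, bool]:
    """Return the accuracy drop from training to serving and whether it exceeds max_gap."""
    gap = accuracy(train_preds, train_labels) - accuracy(serve_preds, serve_labels)
    return gap, gap > max_gap
```

For example, a model that is 100% accurate on training-time data but only 50% accurate on a live sample returns `(0.5, True)`, a clear signal to investigate differences between the training and serving pipelines.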

Oh, and one more key thing: look for redundant or unnecessary data points that could be removed or simplified. Leaner, cleaner training data is easier to maintain and often helps your model perform better.
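Near-duplicate records are one of the most common kinds of redundancy. A minimal sketch that drops records which are identical after lowercasing and collapsing whitespace; this normalization is a simple assumption, and real pipelines often use fuzzier matching.

```python
from typing import List


def deduplicate(texts: List[str]) -> List[str]:
    """Keep the first occurrence of each record, ignoring case and extra whitespace."""
    seen = set()
    unique = []
    for text in texts:
        key = " ".join(text.lower().split())  # normalized form used for comparison
        if key not in seen:
            seen.add(key)
            unique.append(text)
    return unique
```

For instance, `deduplicate(["Great latte!", "great  latte!", "Cold brew"])` keeps only `"Great latte!"` and `"Cold brew"`.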

Keep Testing Till You Get It Right

When it comes to building AI systems people can really trust, we could take some lessons from how software engineers ensure code quality and reliability.

It starts with putting every little piece of your AI through its paces with intensive unit testing, just as developers test individual code modules. But AI isn’t just one component; it’s a complex choreography of models interacting with each other and with other software. So you also need robust integration testing to catch unintended behavior arising from how those pieces fit together.
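Here is what the two levels of testing might look like with Python’s built-in `unittest` module. The recommender pieces (`normalize_query`, `rank_cafes`, `recommend`) are hypothetical stand-ins for real components; the point is that the unit test checks one piece in isolation, while the integration test checks that the pieces work together on messy input.

```python
import unittest
from typing import Dict, List, Set


def normalize_query(query: str) -> str:
    """Hypothetical preprocessing component: lowercase and collapse whitespace."""
    return " ".join(query.lower().split())


def rank_cafes(query: str, cafes: Dict[str, Set[str]]) -> List[str]:
    """Hypothetical model stand-in: rank cafes by how many query words match their tags."""
    words = set(query.split())
    return sorted(cafes, key=lambda name: len(words & cafes[name]), reverse=True)


def recommend(query: str, cafes: Dict[str, Set[str]]) -> str:
    """The integrated pipeline: preprocessing feeds the ranking component."""
    ranked = rank_cafes(normalize_query(query), cafes)
    return ranked[0] if ranked else ""


CAFES = {"Bean There": {"quiet", "espresso"}, "Roast Lab": {"lively", "pour-over"}}


class UnitTests(unittest.TestCase):
    def test_normalize_query(self):
        self.assertEqual(normalize_query("  Quiet   ESPRESSO "), "quiet espresso")


class IntegrationTests(unittest.TestCase):
    def test_pipeline_handles_messy_input(self):
        # Fails if the ranker ever receives un-normalized (e.g. uppercase) words.
        self.assertEqual(recommend("QUIET espresso", CAFES), "Bean There")

    def test_pipeline_handles_empty_catalog(self):
        self.assertEqual(recommend("anything", {}), "")
```

Saved as, say, `test_recommender.py` (a hypothetical filename), the suite runs with `python -m unittest test_recommender`.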

Another obstacle is what developers call “input drift”: when the data inputs start changing in unexpected ways from what the AI was originally trained on. You’ll want monitoring and testing that raise a red flag whenever unexpected inputs could trip up your AI.
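One widely used drift signal for a numeric input is the Population Stability Index (PSI), which compares how values are distributed across bins at training time versus in production: PSI = Σ (actual − expected) · ln(actual / expected). A minimal sketch follows; the bin edges are hypothetical, and a commonly cited rule of thumb (not a universal standard) treats PSI above roughly 0.25 as a significant shift.

```python
import math
from typing import List


def bin_shares(values: List[float], edges: List[float]) -> List[float]:
    """Share of values in each bin; sorted edges define len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1  # index = number of edges passed
    return [c / len(values) for c in counts]


def population_stability_index(
    expected: List[float], actual: List[float], edges: List[float], eps: float = 1e-6
) -> float:
    """PSI between a baseline (training) sample and a live sample; larger means more drift."""
    psi = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


training_values = [1, 2, 3, 4, 5, 6, 7, 8]
live_values = [7, 8, 9, 10, 11, 12, 13, 14]  # the inputs have shifted upward
drift = population_stability_index(training_values, live_values, edges=[4, 8])
```

Identical distributions give a PSI of 0; the shifted live sample here produces a value far above 0.25, which is the kind of red flag a monitoring job would alert on.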

As your AI matures and your user base grows, your testing has to evolve too. Maintaining a regularly updated “golden” dataset representative of the real world is key to making sure your evaluations stay legit. And there’s no substitute for continually bringing in fresh user perspectives through hands-on testing cycles.
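In code, a golden-dataset check can be as simple as replaying a curated list of inputs with known-good outputs and failing the release if accuracy drops below a bar. The sketch below assumes the model is any callable from input to output; the golden examples, toy intent classifier, and `min_accuracy` threshold are all hypothetical.

```python
from typing import Callable, List, Tuple


def evaluate_on_golden_set(
    model: Callable[[str], str],
    golden: List[Tuple[str, str]],
    min_accuracy: float = 0.9,
) -> Tuple[bool, float, List[Tuple[str, str, str]]]:
    """Score a model on curated (input, expected_output) pairs and list every failure."""
    failures = []
    for prompt, expected in golden:
        output = model(prompt)
        if output != expected:
            failures.append((prompt, expected, output))
    accuracy = 1 - len(failures) / len(golden)
    return accuracy >= min_accuracy, accuracy, failures


def toy_intent_model(query: str) -> str:
    """Hypothetical keyword-based classifier standing in for a real model."""
    if "coffee" in query:
        return "cafe"
    return "hotel" if "hotel" in query else "other"


GOLDEN = [
    ("best coffee nearby", "cafe"),
    ("cheap hotels in Rome", "hotel"),
    ("hiking trails", "other"),
    ("coffee beans online", "cafe"),
    ("espresso bar", "cafe"),  # the toy model misses this one
]
passed, score, misses = evaluate_on_golden_set(toy_intent_model, GOLDEN)
```

Here the toy model scores 80% and fails the 90% bar, and `misses` shows exactly which case broke. As real usage evolves, new cases like this get added to the golden set.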

Blending comprehensive testing with a constant feedback loop from the real world? That’s how you develop AI systems that don’t just impress on paper, but prove their trustworthiness over years of use by real people.

Key Takeaways:

- Generative AI refers to algorithms capable of creating new content, such as text, images, audio, and video, based on existing data and user prompts.

- Transformer models, Generative Adversarial Networks (GANs), and Variational Autoencoders (VAEs) are some of the key types of generative AI models in use today.

- Generative AI holds the potential to boost worker productivity and add trillions of dollars to the global economy, but it also presents challenges like potential inaccuracy, privacy violations, and intellectual property exposure.

- Developing responsible and effective generative AI solutions requires a human-centric approach, with clear explanations, user controls, and consideration for diverse perspectives.

- Examining and understanding the raw data used for training AI models is crucial, as the quality and representativeness of the data shape the model’s performance and behavior.

- Rigorous testing, including unit tests, integration tests, monitoring for input drift, and maintaining a representative “golden” test dataset, is essential for building trustworthy AI systems.

What’s the Future of Generative AI?

In the near term, generative AI models are likely to become significantly more capable of understanding and responding to nuanced human instructions.

Anthropic’s constitutional AI research aims to instill models with stable values aligned with human interests. DeepMind is working on growing model capabilities while improving robustness and oversight.

This could enable generative AI to tackle increasingly complex creative and analytical tasks, generating marketing campaigns, software prototypes, architectural designs, and more from high-level prompts. Expect to see wider enterprise adoption for content creation, coding assistance, and streamlining knowledge work.

Longer-term, generative AI breakthroughs in areas like multimodal learning could lead to models that can learn from and generate all forms of data – text, images, audio, sensor data, etc. This could supercharge fields like robotics, autonomous systems, and simulation environments.

However, major challenges around bias, privacy, transparency, and controlling explicit content must still be addressed through improvements to model governance, watermarking, and aligning AI goal structures.

Regulation will likely increase, with the EU’s AI Act expected to enforce strict guardrails around high-risk AI use cases. In the U.S., the White House has published a “Blueprint for an AI Bill of Rights”.

Despite these hurdles, generative AI is projected to be a pivotal general-purpose technology, reshaping content, media, design, science, and beyond in the 2030s and 2040s.

Looking to harness the transformative power of generative AI for your business?

Reach out to Branex, a professional generative AI development company offering a team of experts who can guide you through the process of integrating AI into your products & services.

Want to learn more? Schedule a call with us.